TL;DR: If you have WoT (1.0) installed on an HDD, you may experience loading times so long that you might even miss the start of your battles. Fortunately, we can mitigate this.

World of Tanks update 1.0 was released recently, introducing a new game engine with lots of eye candy and better physics (amongst other improvements). I have been playing and enjoying this game since 2011 (when it was still in beta), as it has really solid gameplay mechanics, and I love World War 2 machinery in general¹.

I was really excited for this major update, but right after installing it and playing a few battles, I noticed something very peculiar: loading into a battle was really slow, and my HDD sounded (almost) like an A-10 firing. In fact, it was so slow that I arrived at battles about 30 to 40 seconds after they had started (and a minute late for the first battle of the day). That’s unfortunate, because:

- In the fraction of my free time that I spend on video games, I prefer to actually play them, not wait for disk IO

- On open maps, spending about a minute at the spawn location is a disaster

- With certain types of tanks, you have to contest key positions on the map, and you need to make a run for it right at the start of the battle

Moving the installation to my SSD would have “solved”² this issue, but I don’t have enough space on it, and I was curious anyway, so I decided to investigate.

Using sensory organs to diagnose

As I mentioned above, my HDD was extremely loud when a map was loading. WD Black HDDs (I happen to own a WD1002FAEX) are infamous for being loud, but this was on a whole new level. Loudness in itself is a very good indicator: HDDs are loud when the head moves around a lot. Aside from noise, this also makes accessing data slower, because it takes time to move the head from one location to another (this is known as seek time). Here’s a cool video for demonstration:

So the slow IO is most likely caused by excessive seeking, which in turn can have multiple causes, such as:

- Disk fragmentation

- Reading data in chunks that are too small

- Reading from files non-sequentially

Or some combination of the above. For deeper analysis, I used ETW to record traces that contain file and disk IO information, and analyzed them with Windows Performance Analyzer.

Using ETW to diagnose

Before doing any tracing, I needed a stable baseline so I could compare results later. I saved two replays with the new client, one on the map called Lakeville, and one on Serene Coast. For measuring, I followed these steps:

- I cleared the OS file cache with RAMMap³

- I double-clicked the replay file (this makes WoT start up and load the replay and the map), and started a stopwatch

- When the map loaded, I stopped the stopwatch, and noted the time

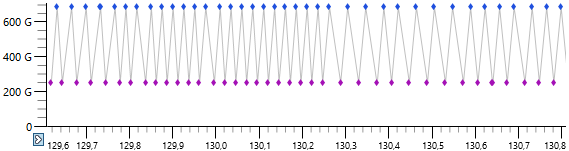

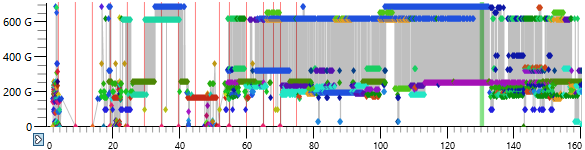

For Lakeville, here’s what I got for the Disk Offset graph, which paints a pretty accurate picture of HDD head movement⁴ (time in seconds is on the X axis, disk offset in gigabytes is on the Y axis, each dot is a separate read, and each color represents a different file):

Even though this is a purely visual representation of the data, it reveals key information:

- Points of the same color tend to be located at roughly the same area on the Y axis (with a few exceptions), suggesting that these files are not fragmented on my disk (assuming mostly sequential access)

- Points with different colors are “mixed together”, so reads of different files are interleaved

- Interleaved reads of different files (points of different color) tend to be scattered around on the Y axis

The last point might explain why the game loads so slowly, and why my HDD is so loud: the head is constantly jumping back and forth between the ~200 GB and ~600 GB ranges. Here’s a closer look at the time slice at about 130 seconds (highlighted in green on the image above):

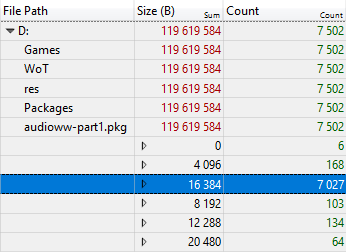
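To put a rough number on why interleaving hurts, here is a toy model in Python (my own simplification, not something derived from the trace): treat each read as a disk offset in MB and add up how far the head has to travel between consecutive reads. The offsets are made up to mimic the ~200 GB and ~600 GB clusters above. Real drives don’t necessarily map offsets linearly to head position, and seek time isn’t proportional to distance, so this only illustrates the trend.

```python
def head_travel(offsets_mb):
    """Total distance (in MB of offset space) the head moves to serve reads in this order."""
    return sum(abs(b - a) for a, b in zip(offsets_mb, offsets_mb[1:]))


# Hypothetical: two files, one near ~200 GB and one near ~600 GB,
# each read sequentially in four 2 MB chunks.
file_a = [200_000, 200_002, 200_004, 200_006]
file_b = [600_000, 600_002, 600_004, 600_006]

# Interleaved order: A, B, A, B, ... (the pattern visible in the trace)
interleaved = [x for pair in zip(file_a, file_b) for x in pair]
# Grouped order: all of A, then all of B
grouped = file_a + file_b

print(head_travel(interleaved))  # 2799994
print(head_travel(grouped))      # 400006
```

With the same eight reads, the interleaved order makes the head cover roughly seven times the distance of the grouped order, and each of those long jumps is a full seek.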

Ideally, WorldOfTanks.exe would issue read requests in a saner, more efficient manner. However, only the game’s developer (Wargaming.net) can make this change, as the game is closed source. Of course, diagnosing a problem is much easier than actually solving it, but judging by this trace, there’s a lot of low-hanging fruit. Take the file [WoT folder]\res\packages\audioww-part1.pkg, for example. Reads from this file are made during pretty much the whole loading process (azure dots in the images above), and they are mostly sequential. And yet, they are done in relatively small chunks:

Game content is stored in uncompressed ZIP files (with the .pkg extension), and as per the ZIP format, individual files are stored sequentially, each one’s bytes in a single contiguous run. It would be much more efficient to issue read requests in bigger chunks (this would probably also allow command reordering⁵ to take place more frequently, potentially minimizing head movement).

I’ve looked at other files that got loaded, and the trace is full of patterns like this.
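Since the .pkg files are ordinary uncompressed ZIP archives, the “stored sequentially” part can be checked with Python’s standard zipfile module. A minimal sketch: list the members in on-disk order using ZipInfo.header_offset and confirm they use ZIP_STORED (no compression). The archive below is a small stand-in built in memory, and its member names are hypothetical.

```python
import io
import zipfile


def stored_layout(zf):
    """Return (name, header_offset, size, is_stored) for each member, in on-disk order."""
    infos = sorted(zf.infolist(), key=lambda i: i.header_offset)
    return [(i.filename, i.header_offset, i.compress_size,
             i.compress_type == zipfile.ZIP_STORED) for i in infos]


# Build a small uncompressed archive in memory as a stand-in for a .pkg file
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_STORED) as zf:
    zf.writestr("sounds/a.bnk", b"A" * 1000)  # hypothetical member
    zf.writestr("sounds/b.bnk", b"B" * 3000)  # hypothetical member

# ZipFile doesn't close a file object passed to it, so buf can be reopened
with zipfile.ZipFile(buf) as zf:
    for name, offset, size, stored in stored_layout(zf):
        print(name, offset, size, stored)
```

To try it on a real package, pass the path of a file such as [WoT folder]\res\packages\audioww-part1.pkg to zipfile.ZipFile instead. Each member’s local header (30 bytes plus the file name and any extra field) is typically followed directly by its data, so the gap between consecutive header offsets is roughly header size plus member size.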

What can we do about this?

Okay, so Wargaming can improve this aspect of their game engine, but what can we do about this right now? We have several options:

- Move the installation to an SSD

- Reduce the amount of data loaded

- Provide more memory for the OS file cache

- Move the relevant files on disk closer to each other

As I mentioned at the beginning of this blog post, option 1 is out of the question for me. For option 2, reducing the graphics quality or turning off music or sound completely would probably help (one of the “tips” for mitigating this loading time issue floating around the internet is turning off sound; no wonder, seeing how reads from audioww-part1.pkg span the majority of the loading process). However, my GPU and CPU handle max settings pretty well, so I didn’t want to go this route. Option 3 is more viable. The file cache acts as a kind of firewall against bad disk access patterns, because, similar to SSDs, RAM doesn’t have moving parts.

Even though this can’t help with the first battle of the day (the cold cache scenario), I gave it a try. My computer has only 8 GB of RAM, so I gave the file cache more wiggle room by closing Google Chrome and Spotify (which, technically, is also Google Chrome…) before launching WoT. This did improve things a bit, but I still arrived at battles about 20 to 30 seconds late on average. Plus, I usually listen to music while playing (and occasionally alt+tab out of the game to browse the internet), so this would have been a big tradeoff for me anyway.

Option 4 sounds good on paper. The set of files that might be loaded is well defined (and they are located in the same directory, which simplifies things), so if these can be moved close to each other on the disk, head movement will be reduced, hopefully improving load times. I’m not sure whether there’s a name for the physical distance between separate files, but I’m going to call it relative fragmentation. Before making any changes, there are two questions we need to answer:

- How can this be achieved?

- Can we measure/prove that we indeed achieved the desired layout?
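“Relative fragmentation” isn’t an established metric as far as I know, so here is one hypothetical way to put a number on it: take every extent (start offset and length) of every file in the set, and divide the span of disk they cover by the bytes they actually occupy. A value near 1.0 means the files are packed tightly together; a large value means the head has to cross lots of unrelated data to get from one file to another. How you obtain the extents is left open here, and the numbers below are invented for illustration.

```python
def relative_fragmentation(extents):
    """Hypothetical metric. extents: (start, length) pairs for all fragments of all
    files in the set, assumed non-overlapping. Returns span / occupied bytes."""
    if not extents:
        return 1.0  # an empty set is trivially "packed"
    start = min(s for s, _ in extents)
    end = max(s + n for s, n in extents)
    occupied = sum(n for _, n in extents)
    return (end - start) / occupied


packed = [(0, 100), (100, 50), (150, 50)]        # back to back
scattered = [(0, 100), (1000, 50), (5000, 50)]   # spread across the disk

print(relative_fragmentation(packed))     # 1.0
print(relative_fragmentation(scattered))  # 25.25
```

Note that this ignores the order in which the game reads the files; two tightly packed clusters far apart would still score badly, which matches the ~200 GB versus ~600 GB situation above.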

Let’s start with question number two. I don’t know of any programs that can tell us the physical layout of select files. However, we can get the data we want with this one weird trick™:

- Clear the file cache with RAMMap

- Start an ETW trace with disk IO information

- Sequentially read every byte of every file in the directory

- Profit

I used the following Python script to perform the sequential read⁶:

```python
import os
import sys

# Read in fixed-size chunks so very large (1 GB+) .pkg files never have to fit
# in memory at once (reading a whole file with one f.read() can raise MemoryError)
CHUNK_SIZE = 2 * 1024 * 1024


def usage():
    print("Usage: readfolder.py [folder]")


def doread(folder):
    # Walk the folder recursively and read every byte of every file, front to back
    for root, dirs, files in os.walk(folder):
        for file in files:
            fpath = os.path.join(root, file)
            with open(fpath, "rb") as f:
                while f.read(CHUNK_SIZE):
                    pass
            print("Read {}".format(fpath))


def main():
    if len(sys.argv) != 2:
        usage()
        sys.exit(1)
    print("About to read all files in {}...".format(sys.argv[1]))
    doread(sys.argv[1])


if __name__ == "__main__":
    main()
```

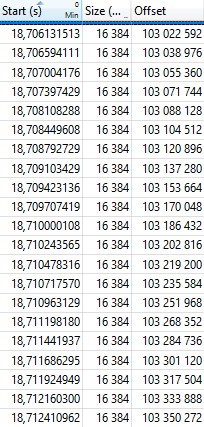

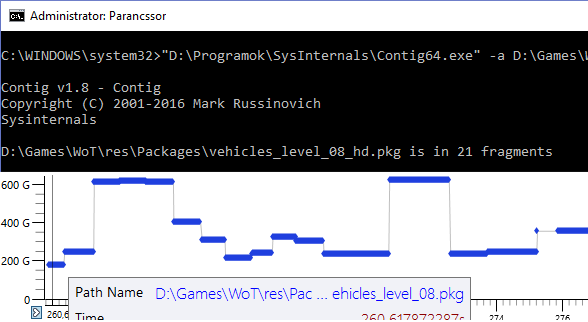

This is the result I got (once again, files are color coded):

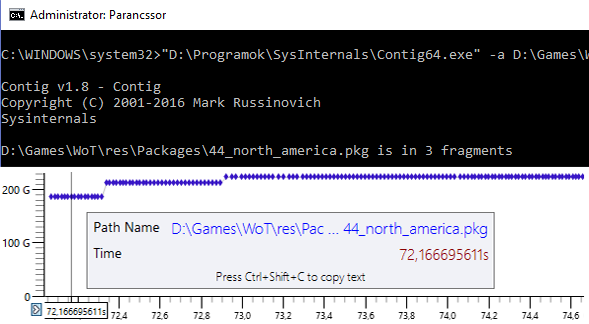

Hmm 🤔. Looks like the majority of the files are located around the ~200 GB range, and are mildly fragmented. Some bigger files aren’t very fragmented either, but their few fragments are located very far from each other (~200 GB and ~600 GB). Just to be sure, I compared this visual representation with contig’s output for a few files (contig can tell how many fragments an individual file consists of), and the results were in line.
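The fragment count that contig reports can also be approximated from the sequential-read trace. Because the script above reads each file front to back, consecutive disk reads of the same file should be contiguous unless the file continues in another fragment. A minimal sketch, assuming each file’s disk reads have already been exported as (offset, size) pairs in issue order (the export step and the sample numbers are hypothetical). OS read-ahead or request splitting can blur this, so treat the result as an estimate.

```python
def count_fragments(reads):
    """reads: (disk_offset, size) pairs for ONE file, in the order they were issued
    during a front-to-back sequential read. Returns the number of contiguous runs."""
    fragments = 0
    expected_next = None  # where the next read would start if the file were contiguous
    for offset, size in reads:
        if offset != expected_next:
            fragments += 1  # discontinuity: the file continues elsewhere on disk
        expected_next = offset + size
    return fragments


# Hypothetical reads (offsets and sizes in MB): two runs, the second one far away
reads = [(200_000, 2), (200_002, 2), (200_004, 2), (600_000, 2), (600_002, 1)]
print(count_fragments(reads))  # 2
```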

At this point, I thought to myself: “Maybe I should try regular defragmentation of my drive and see if it improves things.” I don’t know what parameters defragmentation algorithms take into account, but it would be perfectly reasonable if they only worked at file granularity. If so, defragmenting could actually make my particular situation worse: for example, it could scatter these files across the drive while keeping each individual file in one or a few fragments.

Windows has a built-in defrag utility, so I fired it up. Analysis told me that my disk was 0% fragmented, but I hit “Optimize” anyway (the whole process took about 5 seconds, which was very suspicious…), then reran the measurements outlined above. Guess what: I got virtually the same “layout graph” as before.

I started to look for alternatives. I had two main candidates: contig from Sysinternals, and Defraggler from the makers of CCleaner. Both can defrag individual folders, so I hoped they would minimize not just file-level fragmentation, but relative fragmentation, too. contig does not work on newer Windows 10 versions, so I was left with Defraggler. I pointed it at the [WoT folder]\res\packages\ folder, and waited patiently…

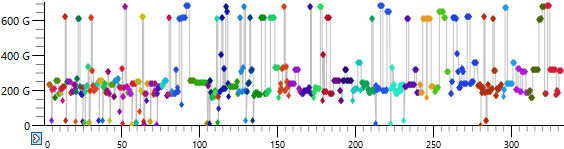

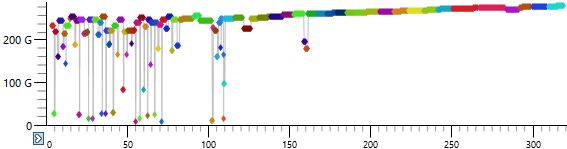

The defrag process took about 10 minutes for this single folder, which I considered a good sign (data must have been moved around). After it finished, I reran my “layout graph” measurement, and this is what I got:

“Hey, that’s pretty good!”

Even though some smaller files got scattered around, the majority of them were relocated to the 200 to 250 GB range, so their relative fragmentation was indeed reduced. I must note, though, that my drive had ~65% free space, so Defraggler had a lot of freedom. I was excited to take some measurements, compare them to the two baselines, and see if things improved⁷:

| | Lakeville | Serene Coast |
|---|---|---|
| Before optimization | 2m 38s | 2m 50s |
| After optimization | 1m 38s | 1m 35s |
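To make the improvement easier to read, the table can be turned into relative numbers with a bit of arithmetic (this only re-derives figures from the table; no new measurements):

```python
def summarize(before_s, after_s):
    """Return (seconds saved, percent reduction, speedup factor)."""
    saved = before_s - after_s
    return saved, 100 * saved / before_s, before_s / after_s


# Load times from the table, converted to seconds
results = {
    "Lakeville": (2 * 60 + 38, 1 * 60 + 38),
    "Serene Coast": (2 * 60 + 50, 1 * 60 + 35),
}

for name, (before, after) in results.items():
    saved, pct, speedup = summarize(before, after)
    print(f"{name}: {before}s -> {after}s, {saved}s saved "
          f"({pct:.0f}% less, {speedup:.2f}x faster)")

# Lakeville: 158s -> 98s, 60s saved (38% less, 1.61x faster)
# Serene Coast: 170s -> 95s, 75s saved (44% less, 1.79x faster)
```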

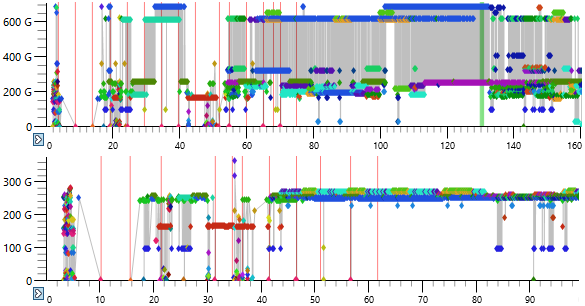

Nice improvement. For comparison, here’s the original disk offset graph for the Lakeville baseline and below the one in this improved state:

But did it solve the original problem of arriving at battles too late (the real-world scenario, if you will)? I checked by rewarding myself with a few battles after this lengthy investigation. In this optimized state, I usually arrive about 5 seconds late for my first battle (cold cache scenario), which is much better than the original ~one minute. For further battles, I arrive 5 to 10 seconds before the battle starts. As an added bonus, my HDD doesn’t sound like it’s about to explode anymore. Hooray!

Closing thoughts

Let’s summarize:

- WoT 1.0 was loading maps super slowly, preventing me from arriving at battles on time

- My initial suspicion was huge amounts of HDD head movement

- This was confirmed by doing disk/file IO analysis with ETW: the game was doing small reads from multiple files, interleaved. Different files (and their fragments) were located very far from each other, so the HDD head had to move around a lot

- Since the game is proprietary, a workaround was needed

- Defragmenting and, most importantly, moving the relevant files (and their fragments, if any) closer to each other on the disk vastly reduced head movement and loading times

Why isn’t every player affected by this? If you have your installation on an SSD, you are unaffected (I’ve seen some players on the forums claiming they’ve purchased an SSD solely because of this problem). If you have lots of memory, you may also be unaffected, because the OS file cache mitigates bad disk access patterns. If you have the game on an HDD, but game files already sit relatively close to each other, you might also be less affected.

If you suffer from this problem like I did, I encourage you to try this workaround, and see if it improves things.

According to Wargaming, one of the key design goals of update 1.0 was that it had to run on the same hardware as before. Even on maxed-out settings, I get roughly the same FPS as before, which is pretty amazing, considering how much the visuals have improved. Loading times, however, regressed drastically. I hope WG will address this in an upcoming update.

If you are interested in the type of analysis I performed, I can highly recommend Bruce Dawson’s ETW video course. In part three, he performs an analysis similar to this while explaining things in much more detail than I did here.

¹ Mildly on-topic: my avatar picture features the Kubinka Tank Museum’s Panther.

² It’s like your car suddenly starting to consume twice as much fuel, and you just strapping on an external fuel tank as a solution…

³ You can’t clear the file cache explicitly, but you can make RAMMap throw away Standby pages, which is a good enough superset (the file cache uses Standby pages).

⁴ I’m not sure whether disk offsets map one-to-one to physical head position, and I’m a bit skeptical, because HDDs nowadays tend to have multiple platters. Either way, the few sources I could google claimed that this is indeed the case, so I settled on this assumption.

⁵ One of the first things I looked for in the trace was how many read reorderings took place. The result really surprised me: not many. Out of ~30,000 disk operations (including reads, writes, flushes, etc.), reordering took place only about a thousand times, and the majority of those happened at the start of the trace, when the binaries (DLLs and the EXE) were loaded by the system loader, reading big chunks of data at once. That’s a suspiciously low number in my opinion, and I’m curious about the cause. My theory is that if operations complete really quickly, the IO queue is almost always shallow. In other words, if the HDD receives a read request while it’s not busy with other requests, it won’t go “maybe I should wait for other requests to arrive before starting this one”.

⁶ Python performed reads in mostly 2 MB chunks; that’s why there is an “unnecessary” amount of dots for each fragment. This way, however, file sizes are also visualized (the “wider” a group of dots, the bigger the file).

⁷ Each cell contains the average of 3 runs, even though this was unnecessary, as results for the same scenario didn’t vary much.

Hey, cool. I’ve added a link to this from https://randomascii.wordpress.com/2015/09/24/etw-central/

There is no guarantee that the offsets will map directly to physical distance on the disk – but it usually does, and clearly it led to a successful fix in this case. Those disk offset graphs are awesome.

Ideally WOT would read from one file and then from another for better efficiency. Or it could read larger chunks – 2 MB or so lets the disk be efficient. Or it could use a different reader thread for each file to at least give the OS the *possibility* of scheduling the reads better. So many options.

A similar problem is documented in https://randomascii.wordpress.com/2012/08/19/fixing-another-photo-gallery-performance-bug/. In this case WLPG is only reading from one file, but it does thousands of random 4-KB reads. The only fix I could find initially was to pre-read the file so that it was all in the disk cache. Now I have an SSD so I can no longer see their horrible disk I/O patterns. I’ve seen this WLPG problem with multiple SQL databases – Visual Studio is another culprit. In the SQL database problem the performance would be greatly improved if the read size was increased from 4 KB to 64 KB or greater, since eventually the cache would start working.


Thanks for leaving a comment, Bruce. That WLPG problem sounds familiar; if I recall correctly, you used it as a demonstration in your Wintellect ETW video course.


Windows’ defragmenter is, quite frankly, terrible. Why?

Well, on XP, people would hear that defragmenting their files was good, and would sit for ages making sure all their word documents and spreadsheets were in single chunks.

This wasn’t much use because really, it doesn’t matter if a Word document is in one chunk or ten; it’s still a small file that is loaded once and in isolation. People did it anyway, though, because “defragmented hard drives work better”.

The imagined need to do a full-disk defrag (the XP tool doesn’t let you defrag specific files like Defraggler does), and the time wasted on it, created a negative perception. So with Vista, MS changed their tool.

Out went the graphical display. Now users can’t see what they are working with. They only get the %-age provided, and now it LIES.

Well. It tells a half-truth.

While there isn’t much value in defragging your documents, there is in defragging your OS. So that’s what it scans. Nothing else.

It’s also scheduled to run in the background and will auto-defrag when over 3%, which is why you don’t often see scores higher than that. Pretty much the only source of fragmentation is Windows Update, so the tool basically just cleans up after Windows Update’s mess.


Thanks for the info, it explains a lot! I get why Microsoft made this decision; however, I feel like there is a definite need for this “niche” functionality. They could move access to these features behind an “Advanced” button, for example.


Hi! Great article, it is already in WoT developers’ hands, now you can feel safe 🙂 Thanks for sharing


Hi! That sounds great! Do you happen to work for Wargaming? If they find it useful, it would be great if they could send some event tokens my way, I wanted to play WoT over the weekend to get the MTLS, but ended up writing this blog post instead (*wink-wink*).


Your method really worked, so thank you very much! To return the favour, let me suggest short-stroking one of your partitions and using it for slow-loading games: https://lifehacker.com/how-to-short-stroke-your-hard-drive-for-optimal-speed-1598306074 Btw, this only works for hard disks.


And also if you don’t want to / cannot reinstall your games in order to move them into your short stroked partition, try Mklink /J (/H and /D won’t work for our purposes) https://www.howtogeek.com/howto/16226/complete-guide-to-symbolic-links-symlinks-on-windows-or-linux/


Also don’t forget the quotation marks just to be safe.


I tried to do the same. My files were scattered even worse all around my 1 TB HDD. With the folder defrag, my load times dropped from ~2:00 to ~1:50, and when I also defragmented my boot drive and the rest of the drive my WoT folder was on, they dropped further to ~1:40. The files are still more scattered than yours, so I’m currently defragmenting my free space and reinstalling WoT into it, to make sure it all ends up in the same range (on the outer edge of the disk, as a nice bonus). One problem I’ve had was Python giving me a MemoryError on large (1 GB+) files while running the script, even though I have a good amount of free RAM.


After defragmenting the free space, moving the WoT folder back, and defragmenting free space again (to pack the WoT files together instead of being scattered on the newly free part) I got my replay load times down to about 1:30. It seems like Windows keeps trying to read some files around the HDD during my testing though. Maybe caching to the near empty RAM?
